Hello, Codeforces!

In this blog I will describe yet another useless unconventional data structure. This time, I will show how to maintain an extended suffix array (suffix array, inverse of suffix array, and $$$lcp$$$ array) while being able to add or remove some character at the beginning of the string in $$$\mathcal{O}(\log n)$$$ amortized time.

It can be used to overkill solve problem M from 2020-2021 ACM-ICPC Latin American Regionals, which my team couldn't solve during the contest. I learned it from here (Chinese), but that source does not show how to maintain the $$$lcp$$$ array.

Here is the whole code of the data structure. To read this blog, I recommend you ignore this code for now, but use it if you have questions about helper functions, constructors, etc, since I will pass over some implementation details in the explanations.

1. Definitions

I will assume the reader is familiar with the definition of suffix array ($$$SA$$$) and $$$lcp$$$ array. If that is not the case, I recommend learning it from Codeforces EDU.

Here, the $$$i$$$-th position of the $$$lcp$$$ array will denote the length of the longest common prefix between the suffixes $$$SA[i]$$$ and $$$SA[i-1]$$$. Also, $$$lcp[0] = 0$$$. The inverse suffix array ($$$ISA$$$), or rank of a suffix, says what position that suffix is at in the $$$SA$$$. That is, $$$ISA[SA[i]] = i$$$.
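To make these definitions concrete, here is a naive sketch that builds the three arrays for a static string, using standard (non-reversed) indices. The names `ExtendedSA` and `build_naive` are made up for this illustration and are not part of the data structure's code.

```cpp
#include <algorithm>
#include <cassert>
#include <numeric>
#include <string>
#include <vector>

// Hypothetical container for the three arrays (not from the blog's code).
struct ExtendedSA {
    std::vector<int> sa, isa, lcp;
};

// Naive O(n^2 log n) construction, only meant to pin down the definitions.
ExtendedSA build_naive(const std::string& s) {
    int n = (int)s.size();
    ExtendedSA r;
    r.sa.resize(n);
    std::iota(r.sa.begin(), r.sa.end(), 0);
    // Sort suffix start positions by comparing the suffixes directly.
    std::sort(r.sa.begin(), r.sa.end(), [&](int a, int b) {
        return s.compare(a, std::string::npos, s, b, std::string::npos) < 0;
    });
    r.isa.assign(n, 0);
    r.lcp.assign(n, 0);  // lcp[0] stays 0 by convention
    for (int i = 0; i < n; i++) r.isa[r.sa[i]] = i;  // ISA[SA[i]] = i
    for (int i = 1; i < n; i++) {
        int a = r.sa[i - 1], b = r.sa[i], k = 0;
        while (a + k < n && b + k < n && s[a + k] == s[b + k]) k++;
        r.lcp[i] = k;
    }
    return r;
}
```

For `baabac` this gives $$$SA = [1, 2, 4, 0, 3, 5]$$$, $$$lcp = [0, 1, 1, 0, 2, 0]$$$ and $$$ISA = [3, 0, 1, 4, 2, 5]$$$.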

In the data structure, since we add characters at the front of the string, we will use a reversed index representation of each suffix, so that the index of a suffix does not change when characters are added in front of it. For example, the suffix of reversed index 2 of $$$baabac$$$ is $$$bac$$$ (its standard index is 3). We will use a function $$$\texttt{mirror}$$$ to go from the standard index representation to the reversed one, and vice versa.
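A minimal sketch of what such an index conversion could look like, assuming 0-based indices and that the current length $$$n$$$ is passed explicitly (the signature is an assumption; the helper `suffix_by_reversed_index` is hypothetical and only there to check the example):

```cpp
#include <cassert>
#include <string>

// Assumed definition: with n the current length, position i counted from the
// front corresponds to position n - 1 - i counted from the back.
// Applying it twice gives back the original index.
int mirror(int i, int n) {
    assert(0 <= i && i < n);
    return n - 1 - i;
}

// Suffix with the given reversed index, i.e. the suffix of length rev + 1.
std::string suffix_by_reversed_index(const std::string& s, int rev) {
    return s.substr(mirror(rev, (int)s.size()));
}
```

With this convention, reversed index 2 is $$$bac$$$ both in $$$aabac$$$ and in $$$baabac$$$, which is why adding a character at the front does not renumber the existing suffixes.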

2. Binary search tree

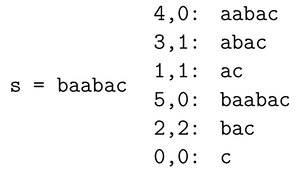

Let's represent the $$$SA$$$ using a binary search tree, such that the in-order traversal of the tree gives the $$$SA$$$. For example, take the $$$SA$$$ of $$$baabac$$$ below. For each suffix, its (reversed) index and $$$lcp$$$ are represented.

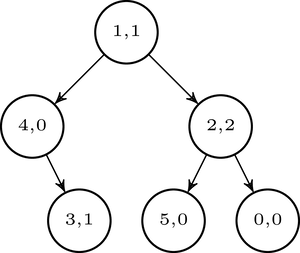

We could represent this using the following binary search tree.

Additionally, we will store an array $$$tag$$$, such that $$$tag[i] < tag[j]$$$ if, and only if, suffix $$$i$$$ comes before suffix $$$j$$$ in the $$$SA$$$ (equivalently, in the binary search tree). Using this, we can compare two suffixes lexicographically in constant time. This is pivotal to make the complexity of the operations $$$\mathcal{O}(\log n)$$$ amortized.

Because we want to maintain the $$$tag$$$ array, we will not use a binary search tree that does rotations, since it is not trivial to update the $$$tag$$$ values during the rebalancing. Instead, we will use a binary search tree called an amortized weight-balanced tree (see below for references). The idea of these trees is that, at any point, every subtree will be $$$\alpha$$$-balanced for some $$$0.5 < \alpha < 1$$$, which means that for every subtree rooted at node $$$x$$$, $$$\max(\text{size}(x.left), \text{size}(x.right)) \leq \alpha \cdot \text{size}(x)$$$. It turns out that if we insert and delete using trivial binary search tree algorithms and, after every change, for every node that is not $$$\alpha$$$-balanced we simply rebuild the entire subtree rooted at that node so that it becomes as balanced as possible, the height of the tree and the amortized cost of inserting and deleting are $$$\mathcal{O}(\log_{\frac{1}{\alpha}} n)$$$. Proving this is beyond the scope of this blog. In the code, I use $$$\alpha = 2/3$$$.
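The balance condition itself is a one-line check. Here is a sketch for $$$\alpha = 2/3$$$, written with integers to avoid floating point; the function name and the choice of passing child sizes directly are assumptions made for this example.

```cpp
#include <algorithm>
#include <cassert>

// alpha = 2/3, stored as a fraction so the comparison stays exact.
constexpr long long ALPHA_NUM = 2, ALPHA_DEN = 3;

// True if a node whose children have the given subtree sizes is
// alpha-balanced, where size(x) = left_size + right_size + 1.
bool is_alpha_balanced(long long left_size, long long right_size) {
    assert(left_size >= 0 && right_size >= 0);
    long long total = left_size + right_size + 1;
    // max(size(left), size(right)) <= alpha * size(x), multiplied by ALPHA_DEN
    return ALPHA_DEN * std::max(left_size, right_size) <= ALPHA_NUM * total;
}
```

After an insertion, one would walk the path from the new node to the root and rebuild the subtree of any node for which this check fails.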

This rebuilding scheme makes it easy to maintain the $$$tag$$$ values by having a large enough interval of possible values (I use $$$[0, 10^{18}]$$$) and recursively dividing it in half (refer to the code for more details).
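As an illustration of the interval-halving idea, here is a sketch of a rebuild that receives the nodes of a subtree in sorted order and the interval of tags that subtree is allowed to use. The array-based `Tree` layout and the exact way the interval is split are assumptions for this example, not necessarily what the full code does.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical array-based tree: node ids identify suffixes, -1 means "no child".
struct Tree {
    std::vector<int> left, right;
    std::vector<int64_t> tag;
    explicit Tree(int n) : left(n, -1), right(n, -1), tag(n, 0) {}

    // Build a balanced subtree out of order[lo, hi) (already in SA order).
    // The root gets the midpoint of the tag interval [tl, tr]; the left child
    // gets the lower half and the right child the upper half.
    // Returns the root id, or -1 for an empty range.
    int rebuild(const std::vector<int>& order, int lo, int hi,
                int64_t tl, int64_t tr) {
        if (lo >= hi) return -1;
        assert(tl <= tr);  // fails only if the tag interval is exhausted
        int mid = lo + (hi - lo) / 2;
        int64_t tm = tl + (tr - tl) / 2;
        int x = order[mid];
        tag[x] = tm;
        left[x] = rebuild(order, lo, mid, tl, tm - 1);
        right[x] = rebuild(order, mid + 1, hi, tm + 1, tr);
        return x;
    }
};
```

Since every level halves the interval, a tree of logarithmic height needs far fewer than the roughly 60 halvings that $$$[0, 10^{18}]$$$ allows.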

Given that we have a binary search tree representing the $$$SA$$$ and $$$lcp$$$ array with logarithmic height, how do we query for $$$SA$$$, $$$lcp$$$ and $$$ISA$$$? If we maintain the subtree sizes of the tree, we simply go down the tree to get the $$$i$$$-th smallest node and so we get $$$SA[i]$$$ and $$$lcp[i]$$$ in $$$\mathcal{O}(\log n)$$$, where $$$n$$$ is the length of the string. To get $$$ISA[i]$$$, we go down the tree, counting how many nodes in the tree have $$$tag$$$ value smaller than $$$tag[i]$$$.
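Both descents are standard order-statistics walks. A sketch, assuming a hypothetical `Node` layout where the node id is the suffix identifier and children are stored as indices (`-1` for none):

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical node layout for this example.
struct Node {
    int left = -1, right = -1;
    int size = 1;       // number of nodes in this subtree
    int64_t tag = 0;    // order-maintenance label
};

int size_of(const std::vector<Node>& t, int x) { return x < 0 ? 0 : t[x].size; }

// SA[k]: id of the k-th node (0-based) in the in-order traversal, or -1.
int kth(const std::vector<Node>& t, int root, int k) {
    int x = root;
    while (x >= 0) {
        int ls = size_of(t, t[x].left);
        if (k < ls) x = t[x].left;
        else if (k == ls) return x;
        else { k -= ls + 1; x = t[x].right; }
    }
    return -1;  // k out of range
}

// ISA[i]: number of nodes whose tag is smaller than tag[i], or -1 if absent.
int rank_of(const std::vector<Node>& t, int root, int i) {
    int x = root, smaller = 0;
    while (x >= 0) {
        if (t[i].tag < t[x].tag) x = t[x].left;
        else if (t[i].tag == t[x].tag) return smaller + size_of(t, t[x].left);
        else { smaller += size_of(t, t[x].left) + 1; x = t[x].right; }
    }
    return -1;  // node i is not in the tree
}
```

If each node also stores its $$$lcp$$$ value, then $$$lcp[k]$$$ is simply the value stored at the node returned by `kth`.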

Finally, we can also use the tree to query for the $$$lcp$$$ between two arbitrary suffixes $$$i$$$ and $$$j$$$ (not necessarily consecutive in the $$$SA$$$). We know that this is equal to the minimum between the $$$lcp$$$ values in the range defined by the two suffixes. So we just need to store the minimum over all $$$lcp$$$ values on every subtree, and query for the minimum over all nodes that have $$$tag$$$ value in the range $$$(tag[i], tag[j]]$$$, assuming $$$tag[i] < tag[j]$$$. This can be done by going down the tree similar to a segment tree, in $$$\mathcal{O}(\log n)$$$ time.
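One possible way to write this descent is sketched below. It is an assumption of how the query could look, not the blog's actual $$$\texttt{query}$$$ function: the recursion carries the open interval of tags `(bl, bh)` that the current subtree can contain, which plays the role of the segment bounds in a segment tree. The `Node` layout is hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cstdint>
#include <limits>
#include <vector>

struct Node {
    int left = -1, right = -1;
    int64_t tag = 0;
    int lcp = 0;      // lcp with the previous suffix in SA order
    int min_lcp = 0;  // minimum lcp over this node's subtree
};

const int INF = std::numeric_limits<int>::max();

// Minimum lcp over nodes of subtree x whose tag lies in (lo, hi].
// All tags in subtree x are known to lie strictly between bl and bh.
int query(const std::vector<Node>& t, int x, int64_t lo, int64_t hi,
          int64_t bl, int64_t bh) {
    if (x < 0 || bh <= lo || bl >= hi) return INF;   // nothing in range here
    if (lo <= bl && bh <= hi) return t[x].min_lcp;   // subtree fully in range
    int res = (lo < t[x].tag && t[x].tag <= hi) ? t[x].lcp : INF;
    res = std::min(res, query(t, t[x].left, lo, hi, bl, t[x].tag));
    res = std::min(res, query(t, t[x].right, lo, hi, t[x].tag, bh));
    return res;
}

// lcp between two distinct suffixes i and j that are both in the tree.
int lcp_between(const std::vector<Node>& t, int root, int i, int j) {
    assert(i != j);
    int64_t lo = std::min(t[i].tag, t[j].tag);
    int64_t hi = std::max(t[i].tag, t[j].tag);
    return query(t, root, lo, hi, INT64_MIN, INT64_MAX);
}
```

At each depth at most two subtrees can straddle `lo` or `hi`, so only $$$\mathcal{O}(\log n)$$$ nodes are visited when the height is logarithmic.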

3. Adding a character

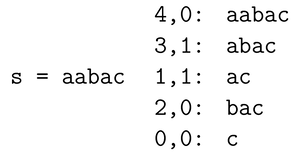

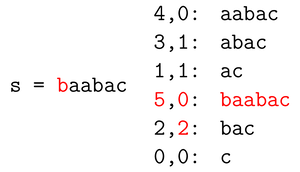

Since we add or remove characters at the front of the string, we only add or remove a single suffix of the string (and, equivalently, a single node in the binary search tree), and the previous suffixes remain unchanged and maintain their relative order. Below we see represented in red what changes in the $$$SA$$$ and $$$lcp$$$ after adding $$$b$$$ to $$$aabac$$$.

$$$\hspace{30pt}$$$

$$$\hspace{30pt}$$$

So, to update the structure with the new suffix, we just need to find the position where the new suffix will end up, compute its $$$lcp$$$ value, and possibly update the $$$lcp$$$ value of the suffix that comes right after it.

3.1. Comparing the new suffix with another suffix

If we can compare the new suffix with any other suffix, we can use this to go down the tree and figure out the position we need to insert the new suffix. To make this comparison, we use the following trick: we compare the first character of the suffixes. If they are different, we know which one is smaller lexicographically. If they are the same, then we end up with a comparison between two suffixes that are already in the tree, and we know how to compare them! Just use their $$$tag$$$ value.
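A sketch of this comparison, under a few assumptions that are not from the blog: the string is stored reversed in `s_rev` (so `s_rev[r]` is the first character of the suffix with reversed index `r`, and its tail is the suffix with reversed index `r - 1`), `tag` is indexed by reversed index, and the function name is made up.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

// Is the new suffix (character c followed by the whole current string)
// lexicographically smaller than the existing suffix with reversed index j?
bool new_suffix_is_smaller(const std::string& s_rev,
                           const std::vector<int64_t>& tag, char c, int j) {
    int n = (int)s_rev.size();
    assert(0 <= j && j < n);
    if (c != s_rev[j]) return c < s_rev[j];  // first characters decide
    // Suffix j is the single character c, a proper prefix of the new suffix.
    if (j == 0) return false;
    // Same first character: compare the tails, which are existing suffixes
    // n - 1 (the whole current string) and j - 1.
    return tag[n - 1] < tag[j - 1];
}
```

The `j == 0` case is the one place where a tail is empty, so it has to be handled before looking at the tags.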

3.2. Getting the $$$lcp$$$ values

To get the $$$lcp$$$ value for the new suffix and for the node that goes after the new suffix (since its $$$lcp$$$ value might change), we will use the same trick: if the first characters of the suffixes are different, then their $$$lcp$$$ is zero. Otherwise, it is one plus the $$$lcp$$$ between two suffixes that are already in the tree, which we already know how to compute: just call the $$$\texttt{query}$$$ function.
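The same trick in code form, as a sketch with the same assumed conventions as before (string stored reversed in `s_rev`, suffixes named by reversed index). The tree query is passed in as a callback, and `brute_lcp` is only a brute-force stand-in for it so the example can be run on its own; both names are hypothetical.

```cpp
#include <cassert>
#include <functional>
#include <string>

// lcp between the new suffix (c followed by the whole current string) and the
// existing suffix with reversed index j. lcp_existing(a, b) must return the
// lcp between two different existing suffixes a and b.
int lcp_with_new_suffix(const std::string& s_rev, char c, int j,
                        const std::function<int(int, int)>& lcp_existing) {
    int n = (int)s_rev.size();
    assert(0 <= j && j < n);
    if (c != s_rev[j]) return 0;  // first characters differ
    if (j == 0) return 1;         // suffix j is just the character c
    return 1 + lcp_existing(n - 1, j - 1);  // matched character plus the tails
}

// Brute-force replacement for the tree query, for illustration only.
// The k-th character of the suffix with reversed index a is s_rev[a - k].
int brute_lcp(const std::string& s_rev, int a, int b) {
    int k = 0;
    while (k <= a && k <= b && s_rev[a - k] == s_rev[b - k]) k++;
    return k;
}
```

For example, when adding $$$b$$$ to $$$aabac$$$, the $$$lcp$$$ with $$$bac$$$ is $$$1 + lcp(aabac, ac) = 2$$$.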

Since now we know how to compare the new suffix with any other suffix in the tree, and how to get the $$$lcp$$$ between the new suffix and any other suffix in the tree, we are ready to insert a new suffix: just go down the tree to the correct position, insert the new suffix, compute its $$$lcp$$$ and recompute the $$$lcp$$$ of the next node in the tree.

4. Removing a character

Removing a suffix is relatively straightforward: go down the tree, using the $$$tag$$$ array, to find where the suffix we want to remove is. When found, just remove it using the standard binary search tree algorithm.

Now note that the $$$lcp$$$ value of the node that comes after the removed node might be incorrect. Luckily, it is easy to get its correct value: it is the minimum between the current value and the $$$lcp$$$ value of the suffix we are removing. This follows from the fact that the $$$lcp$$$ between two suffixes is the minimum over the $$$lcp$$$ values of the range defined by them in the $$$SA$$$.
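To see the update rule in isolation, here is a sketch that applies it to plain arrays instead of the tree. The function name is made up, and erasing from a `std::vector` is linear, so this only illustrates the $$$lcp$$$ fix and not the complexity.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Remove the suffix at position p of the suffix array and repair the lcp
// value of the suffix that came right after it.
void erase_position(std::vector<int>& sa, std::vector<int>& lcp, int p) {
    assert(sa.size() == lcp.size());
    assert(0 <= p && p < (int)sa.size());
    if (p + 1 < (int)sa.size()) {
        // New neighbours are positions p - 1 and p + 1; their lcp is the
        // minimum over the range between them, i.e. min(lcp[p], lcp[p + 1]).
        lcp[p + 1] = std::min(lcp[p + 1], lcp[p]);
    }
    sa.erase(sa.begin() + p);
    lcp.erase(lcp.begin() + p);
}
```

With reversed indices, $$$baabac$$$ has $$$SA = [4, 3, 1, 5, 2, 0]$$$ and $$$lcp = [0, 1, 1, 0, 2, 0]$$$. Removing the first character deletes the suffix with reversed index 5 at position 3, and the $$$lcp$$$ of $$$bac$$$ drops from 2 to $$$\min(2, 0) = 0$$$, which matches the arrays of $$$aabac$$$.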

Note that I don't do the rebalancing/rebuilding on node deletion. This means the height of the tree won't always be logarithmic in the current size of the string. However, it will still be logarithmic in the maximum size of the string so far, so this is not a problem.

5. Problems

I only know of one problem for which it makes sense to use this data structure.

6. Final remarks

This data structure is relatively simple, and yet very powerful. Not even suffix trees and suffix automata, which can update their structure with character additions, are capable of rollbacks without breaking their complexity. So this scores yet another point for suffix arrays being the best suffix structure.

This data structure is not too fast, but it is not super slow either. It runs in about 3 times the time of my static $$$\mathcal{O}(n \log n)$$$ suffix array implementation.

I would like to thank arthur.nascimento for creating the problem 103185M - May I Add a Letter?, which made me look into this data structure. I also thank tdas for showing me that it exists.

References

Explanation of the data structure (without maintaining the $$$lcp$$$ array) (Chinese): Link

How do you guarantee that tags do not deplete?

That would only happen if we had a long path in the tree, that is, if the tree got too unbalanced. But from our guarantee of the tree being somewhat balanced, this won't happen.

You can also think of the tag as being a mask for the path from the root to the node (so, for every bit, it would tell you if you need to go left or right). This would only break if the tree got too unbalanced. This is not how I implemented it, but the result is similar.

Wow, interesting. So you really need the balancing to be a strong guarantee, and to recalculate tags in the whole subtree if anything breaks...

Yes, exactly. I didn't mention it in the blog, but instead of using the $$$tag$$$ array you can use the $$$lcp$$$ query to compare two suffixes (and to compute the $$$lcp$$$ query without the $$$tag$$$s, you can use the $$$ISA$$$). This adds another $$$\log$$$ factor to the complexity, but it allows you to use other binary search trees. In fact, if you use some persistent binary search tree, you can make the whole thing persistent.

The problem of assigning tags in this way is sometimes called the Order-maintenance problem. There are actually $$$O(1)$$$-amortized solutions to the order-maintenance problem. Lecture 8 of this course also discusses this problem briefly (and some related problems).

Just curious, have you taken this course at MIT?

Yes, it was one of my favorite courses.

The actual performance and implementation of the $$$O(1)$$$ idea will be sad, though. I'm not sure what the best way to implement list order maintenance is.

Amortized weight-balanced trees are usually known as scapegoat trees. It is a funny thing. You can actually maintain dynamic centroid decomposition in a similar way.

As far as I know, scapegoat trees refer more specifically to an optimization of amortized weight-balanced trees, saving memory at little or no runtime cost by avoiding the need to actually save subtree weights. Scapegoat trees would also work in this application.

EDIT: After playing around with it for a bit, I wasn't able to find a debit invariant that supports the persistence-related claims I previously made here. I may have mis-remembered something; either way, I am retracting those claims. (The curious can read the revision history and form their own judgements.)

I think we are talking about the same thing but just using different idioms. Your standard trick also applies to the scapegoat tree as I know it.