Hello Codeforces!

HackerEarth invites you to a unique Artificial Intelligence type contest — Bot Challenge. The contest will start on January 8, 3:30 PM MSK and will last for almost a week.

You have to build a bot that plays a two-player game (like Tic-tac-toe or Chess) with a bot built by another user. Your bot has to read what happens and play (print) its moves against the opponent. At the end of challenge, your bot will be played in a tournament against all other bots, and the bot that wins the tournament will be declared the winner.

Also, while the contest is running, you can challenge other people who have submitted their bots to play against you.

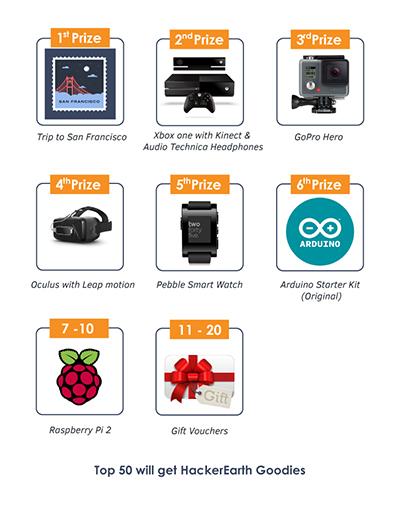

Register at https://www.hackerearth.com/bot-challenge-india-hacks-2016/ and fight for prizes:

You can practice in previous Bot contests(#1 and #2), to understand more about the problem statement and the format of the contest. Gullesnuffs won last two Bot challenges. Will you allow him to take the first place again?

You can check out this gameplay between Gullesnuffs and uwi in one of previous Bot challenges. Hit the replay button there and just watch it.

This challenge is a part of IndiaHacks 2016 tracks. Don't miss qualifications for the other track — algorithms. Check out date of the next qualification round at the link provided. I'm responsible for the final round (problems will be prepared by many setters though) — 24 hours, 10 problems, including an approximate one. And the same set of prizes!

Participate and have fun!

Contest has started!

"We will run intermediate tournaments at 0700 UTC everyday till the end of the contest."

As far as I understood the rules, these tournaments won't be counted towards our final score? The final ranking depends only on the last tournament?

There is "comments" section under the problem — you should ask there. I am involved in IndiaHacks but I'm not not organizing the Bot Challenge. Thus, I don't know many things. And I don't want to give you incorrect answer.

Gullesnuffs is leading the third bot challenge in a row. I don't think he's going down! :)

The rules are somewhat unclear — is it allowed to use external code? E.g. for this game, there is a very strong program Invader, so if someone gets access to its source code, he just wins, right?

Here is the link!

Good luck at decompiling of .exe :D

So, the contest ended, let's share approaches.

I've implemented MCST with pruned playouts (after 5 moves, playout is terminated, and the sign of heuristic scoring function is used to determine who wins). It also doesn't consider all moves, but only top f(number of playouts in the node) by heuristic score (f() is at most 400). I.e., depending on the number of playouts, some best moves are considered, the more playouts we already have — the more moves are considered. This helps to greatly reduce the size of the tree, especially at the beginning of the game.

The game seems to be very sensitive towards the heuristic scoring. I used the following: for each empty cell, compute distances (in queen moves) to the closest of our queens and to the closest of opponents queens (assuming that cells with arrows or queens are occupied). If our queen is closer, add 1 to the score, if opponent's queen is closer, subtract 1 from the score, otherwise (distances are equal) add or subtract 1/16 to the score depending on whose move is it.

Interesting observation is that the scoring function alone (w/o tree search) is around top-10 (as can be seen from my first submission). Also, it turns out that tree-search algorithms are very sensitive towards this function — e.g., changing 1/16 to 1 greatly reduces benefit of both MCST and Alpha-Beta. Maybe making this function smarter might help a lot.

I didn't have much time for this task (started only 3 days before the deadline, so only had couple of evenings), so I didn't tune parameters much, but my MCST parameters are:

My final version successfully plays against people below position 1 in the intermediate leaderboard (e.g., win/loss ratio vs. 2nd place is around 0.5, so I guess random will decide final the ranking there), but what surprises me is that my program consistently looses to the 1st place. Not sure how is it possible to achieve, according to this page, current state of art in game of amazons is MCST similar to mine. Maybe the key is to use smarter scoring function. But I really would be interested in learning Mårten Wiman's approach.

I had the same idea from the very beginning, but it gave me a different approach. I used neural network with two hidden layers (with 60 and 40 neurons) to estimate the position. The inputs of my NN were:

This gives a total of 3+8*13=107 inputs.

Here a square is controlled by a piece iff the square could be reached by the piece faster than by its opponents.

NN was learned entirely by self-play. After ~30,000 games it has exactly what it has on the leaderboard: 10th place and 2310 rating (which is, by the way, x1.5 bigger than mine!).

Some minor modifications:

floatare supreme here as compared todouble.Hm, interesting, if I understand correctly, cases with successful application of (non-trivial) Machine Learning to games are quite rare (notable exception is Backgammon; also, recently some good results were obtained for Go with CNNs, but they still are inferior to plain-old MCST).

You're saying you trained NN with self-play. How exactly did you do it? Was it reinforcement learning? Or rather temporal learning (i.e. fitting function f(position) so that f(position) ~= min max f(position after two moves), with positions sampled from self-play)?

By the way, all your features are structured. In such cases (when you have some meaningful features, not just a large array of data), NNs usually aren't the best choice. Did you try other Machine Learning approaches, e.g. boosted trees (can easily be trained with XGBoost)? On such features, it should produce higher-quality models compared to NN, and more importantly, these models would be faster to compute, so that some kind of tree search can be applied on top of the model.

Well, I agree with this. However, I should add that Matthew Lai's Giraffe engine used NN and reached International Master ranking. See more at arXiv: http://arxiv.org/abs/1509.01549

Disclaimer: it's my first ever real attempt to use machine learning anywhere, so I'm a machine learning n00b, and my arguments will probably be heavily criticized by red folks.

During self-play, engine chose the move that was most favored by NN (i. e., it made 1-ply search). After that, it launched backpropagating algorithm (with the only exception: instead of gradient I used trace value, which was updated as follows: trace' = λ × trace + gradient (λ is a decay rate, and it was equal to 0.6), so yes, it was a reinforcement learning.

No, I didn't :( After googling a bit, your idea about boosted trees seems interesting, though.

Finally, I'll share my code here: http://pastebin.com/6izY5X6f

Hm, the contest is finished, but it seems I cannot access source code of solutions of other people :( Let's hope Mårten Wiman reads this thread and posts his approach. BTW, last day was rather productive for other participants — 2nd place from the preliminary tournament is out of top-10 in the final tournament. So I would be interested in learning other people' approaches as well.

FWIW, my source code: http://pastebin.com/BYCN7aF4.

The main reason I took part in this contest was that I had heard of alpha-beta pruning and it sounded pretty cool and I wanted to learn it, so when I saw the announcement for this contest I decided to go for it. Unsurprisingly, I used alpha-beta pruning.

For any board state I gave it a score equal to the difference between the number of empty cells my queens were closest to and the number of empty cells my opponents queens were closest to.

Since the pruning alpha-beta is able to do is highly dependant on the order in which the moves are processed(or at least that is my understanding), I processed moves sorted by a metric which was efficient to compute and gave weightage to getting queens out of a tight spot and into emptier spaces and shooting arrows in positions that put my opponents queens in tight spots.

This along with some other heuristics was enough for the 10th place.

I too would like to see the approaches used by the top competitors. ilyakor has already introduced me to a new cool sounding term "Monte Carlo tree search" and I would like to see some more of these nice terms.

Here is my code: http://pastebin.com/16nsxEtY

The solutions will be made public soon. I can share Marten's last submitted code, till then. Here it is: http://pastebin.com/Zuty4CaM — I hope he sees this thread and lets us know things about his approach!

Thanks!

The solution looks like classic alpha-beta with a complex hand-crafted scoring function. I guess it takes lots of time, effort and understanding of the game to tune such a good function, so it's a well-deserved 1st place :)

I also ran some games against my bot (MCST with simple scoring) at different TLs (for both bots), and results are as follows:

So, the popular claim that MCST scales much better than alpha-beta (i.e. strength grows more with more computational resources) appears to be true in this case.

I used alpha-beta pruning together with various search optimizations, namely iterative deepening, the killer heuristic and transposition tables (although I tried some other ones as well, but they turned out not to be very useful).

I also used a quite complicated evaluation function, as you noted. Since the branching factor of the game is quite large, I thought that it was better to make an expensive but accurate evaluation function, rather than a cheap but inaccurate one.

My evaluation function tried to decide which regions "belonged" to which player. I more or less assumed that a square belonged to the player who could get there faster with one of their amazons, although I also accounted for the fact that it's better to be the only person who can use a square than just being able to get to that square one move faster than the opponent. I think most people did something similar to this.

However, my evaluation function also considered the mobility of the amazons. It seemed like most people didn't realise how bad it was for them when one of their amazons got completely stuck in a small region in the beginning of the game, and my bot took advantage of that.

I also used a special end-game evaluation function to minimize the risk that my bot would fail to use all its squares (this part of the evaluation function was quite simple, it was based only on the number of connections between squares, which caused the bot to try and get rid of the squares on the border of a region first).

Finally, I used an evolutionary approach to decide the weights used in the evaluation function. There were about 20 parameters that needed to be set, and as I'm not a very good player myself (I hadn't seen the game before the contest), I didn't know what values to use, so I let my bot play against itself (it probably played about 10000 games, with a time limit of 0.1 s/move) to determine which values worked well.

As I can see, discussion has been restarted, so I'll share some big news here (both for the Go-playing world and AI world) here. On 27 January Google annonunced that their program AlphaBot has defeated Fan Hui, the French professional Go player born in China, in the even 5-game match on 19x19 board with score 5-0 (the best previous programs could do was to gain a small edge with a 4-stone handicap – a significant advantage, equivalent to rook handicap in chess). It used deep convolutional neural network.

Speaking of its strength: AlphaGo beats best previous programs (such as Zen) on a 4-stone handicap.

UPD I think we should get our popcorn ready for a big show. In March AlphaGo is going to play against the legendary Lee Sedol, Go master from South Korea, who is considered to be one of the strongest players in the world today. More about Lee Sedol: link link.

Note that AlphaGo is MCST, and CNNs are used for MC move policy (this CNN was trained on human games) and node priors (this CNN was obtained with reinforcement learning).

So the team combined two most promising approaches (integrating CNN to MCST) and obtained this super-strong program. To my knowledge no new ideas were introduced, though this is still an awesome achievement. For the record, whitepaper is here.