(I'm too lazy to write a post so I copied last year's, thanks pooty)

Hello, Codeforces community!

We are happy to invite you to participate in National University of Singapore (NUS) CS3233 Final Team Contest 2024 Mirror on Monday, April 15, 2024, at 09:15 UTC. CS3233 is a course offered by NUS to prepare students in competitive problem solving.

The contest is unofficial and unrated, and will be held in Codeforces Gym. Standard ICPC rules (Unrated, ICPC Rules, Teams Preferred) apply. Note the unusual starting time.

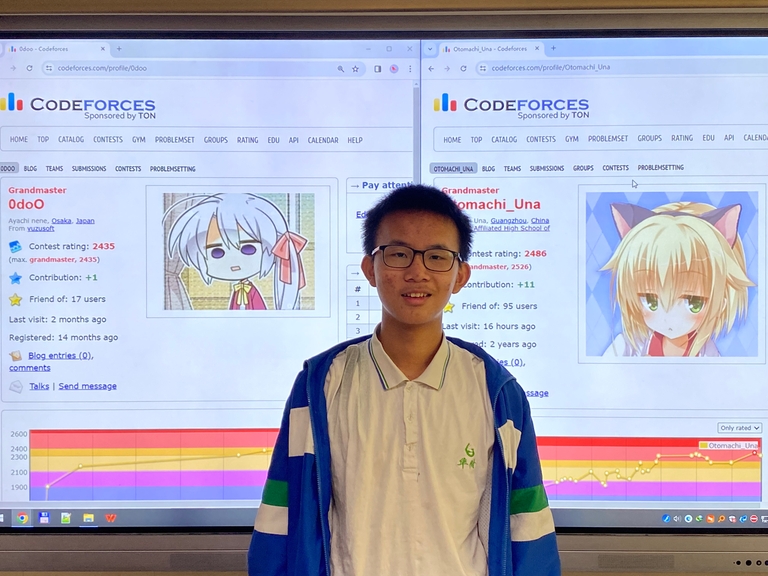

The problems are written and prepared by user202729_, rama_pang, yz_, benson1029, HuaJun, and feeder1.

We would also like to thank:

- Prof. Steven Halim for coordinating and teaching the CS3233 course;

- benson1029 and HuaJun as teaching assistants;

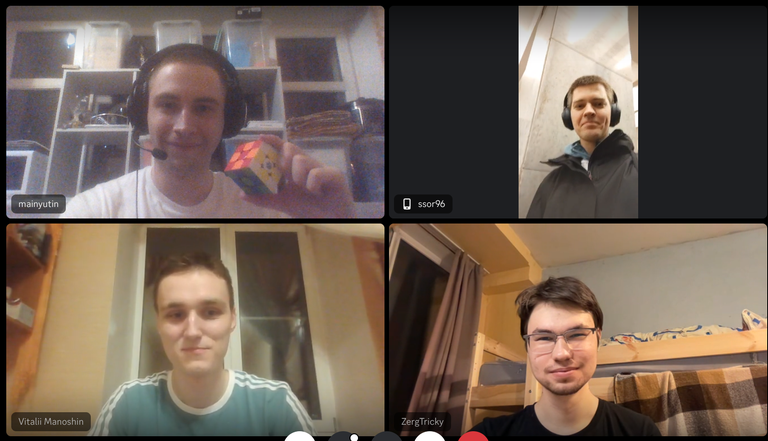

- stack.py (Pyqe, hocky, faustaadp) and Berted for testing the contest;

- errorgorn for existing;

- the Kattis team for the platform used in the CS3233 course and in the official contest;

- Codeforces Headquarters for letting us announce on the main page; and finally

- MikeMirzayanov for the amazing Codeforces and Polygon platforms!

The contest will last for 5 hours and consist of 13 problems. While it is preferred to participate in a team, individual participation is also allowed.

The problems themselves may be quite standard, as they are targeted toward those who have just learned competitive problem solving. However, we have also included a few challenging problems for stronger teams, such as the ex-IOI or ICPC participants who are also taking the course. Participants can therefore expect a good mix of problems of varying difficulty, which we hope will be educational!

We hope you will enjoy the contest and have fun. Good luck!

UPD: The contest is over! Here is the editorial.