Last August, I signed up for 300iq's online course, hoping to improve my competitive programming skills. The course seemed promising at first: there was a group of about 20 people with ratings from 1800 to 2400. But after we completed two contests, Ildar (or someone who has access to his account) stopped responding to messages.

Many of us felt abandoned and frustrated by the lack of support. What's more, 300iq charged $100 in cryptocurrency to enroll in this course, which added to the disappointment. We've been waiting almost a year for his response, but he still ignores us. As far as I know, he is still selling his course, so beware of Codeforces' biggest scammer!

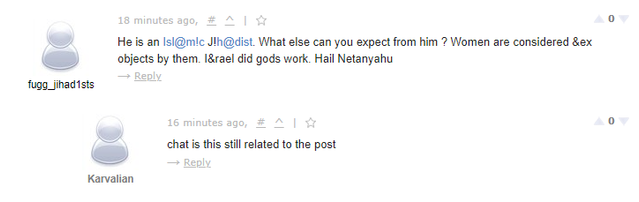

If anyone needs evidence, feel free to ask.