Hi, all,

A few other folks at NEAR and I work on teaching machines to program. A particularly exciting sub-project of that is teaching machines to solve competitive programming problems.

In this post I would like to give a quick overview of where the state of the art is today, what the major challenges are, why this is not a popular area of research, and how the CodeForces community can help to address some of the issues the program synthesis community is facing today.

We also have a certain budget allocated for this project, and we are paying CodeForces members who help us with some data annotation challenges. We have paid more than $10k in our first two annotation projects, and are launching three more projects today. Scroll to the end if you are interested.

Competitive programming as a benchmark

With the emergence of deep learning, neural networks started performing almost at a human level in many tasks: visual object recognition, translation between languages, speech recognition, and many others. One area where they haven't shown anything exciting yet is programming. I will talk about the state of the art below, but overall neural networks are nowhere close today to doing actual coding.

But what would it even mean to solve programming? What would be good benchmarks and milestones to recognize? One such milestone is solving competitive programming. We are not talking here about building a bot that would perform at tourist's level, but solving at least problems A and B of a Division 2 round would be super impressive. Even this is actually very hard for computers, and I will talk about why below in the Open Challenges section.

State of the art

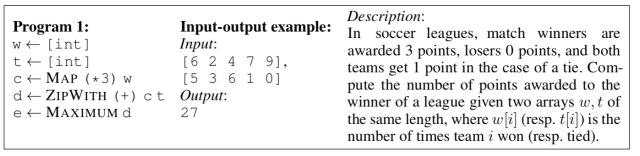

The area of research that is tasked with automated program generation is called Program Synthesis. The two most common sub-areas of it are programming from examples (synthesizing a program from a few input/output examples of what it should do) and programming from description (synthesizing a program from an English description).

Programming from examples has a long history. One known example that you can run in your browser is Magic Haskeller, which is a very sophisticated system that synthesizes rather nontrivial Haskell programs from just one example.

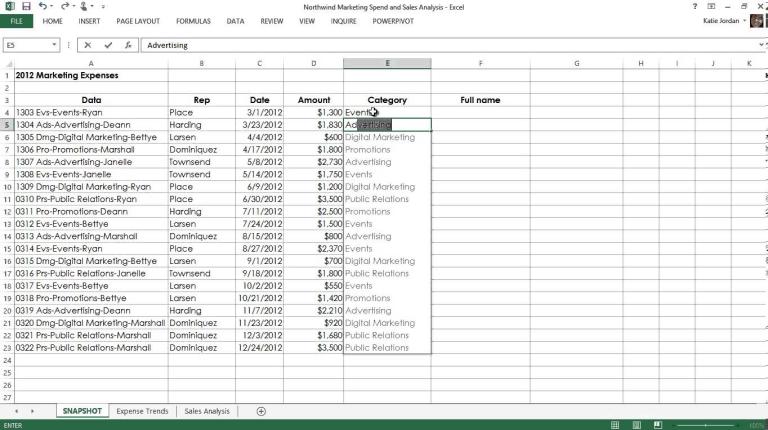

There have been some applications of program synthesis from examples in commercial products. Modern distributions of Excel come with a feature called FlashFill. If you have a column of first names and a column of last names, and enter an email address in the third column built from the first row's names, FlashFill will immediately synthesize a program that concatenates the first letter of the first name with a dot, the last name and "@gmail.com", and suggest auto-filling the rest of the column. This looks like magic, and it took a very strong team of program synthesis experts at Microsoft Research more than a year of work to deliver it.

Ultimately the lab that created FlashFill created a general purpose framework, PROSE, in which one can create services like FlashFill by describing a domain-specific language and writing a few C# functions.
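To make the idea of synthesizing a string program from one example concrete, here is a toy sketch. It is not how FlashFill or PROSE work internally: the tiny set of building blocks, the greedy search and all names (`synthesize`, `run`, `pieces_for`) are assumptions made up for illustration.

```python
def pieces_for(num_columns):
    """Building blocks of a hypothetical mini-DSL: expressions over the input columns."""
    pieces = []
    for i in range(num_columns):
        pieces.append((f"col{i}", lambda row, i=i: row[i]))
        pieces.append((f"lower(col{i})", lambda row, i=i: row[i].lower()))
        pieces.append((f"lower(col{i}[0])", lambda row, i=i: row[i][:1].lower()))
    return pieces


def synthesize(row, output):
    """Greedily explain `output` as a concatenation of column pieces and constants."""
    pieces = pieces_for(len(row))
    # Prefer longer column-derived pieces; fall back to a one-character constant.
    pieces.sort(key=lambda piece: len(piece[1](row)), reverse=True)
    program, pos = [], 0
    while pos < len(output):
        for name, fn in pieces:
            value = fn(row)
            if value and output.startswith(value, pos):
                program.append((name, fn))
                pos += len(value)
                break
        else:
            ch = output[pos]
            program.append((repr(ch), lambda row, ch=ch: ch))
            pos += 1
    return program


def run(program, row):
    """Execute a synthesized program on a new row."""
    return "".join(fn(row) for _, fn in program)


program = synthesize(("John", "Smith"), "j.smith@gmail.com")
print(" + ".join(name for name, _ in program))
print(run(program, ("Ada", "Lovelace")))
```

A greedy search like this is easily fooled (for example, when a constant happens to start with the same letter as a name), which hints at why a production-quality system needs ranking, backtracking and a carefully designed DSL.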

A separate epic paper from a different lab at MSR called TerpreT serves as a great review and comparison of different approaches to programming from examples. It compares using SMT solvers, linear programming and neural networks, and shows that as of today using more traditional approaches such as SMT solvers still noticeably outperforms deep learning approaches.

An early attempt to apply programming by example to solving competitive programming is a paper from the same lab called DeepCoder. It can synthesize short programs for very simple problems by seeing just a few examples, and uses a combination of deep learning and several traditional approaches.

Another great and inspiring example of applying program synthesis from examples is a paper called Chlorophyll, in which the technique is applied to optimizing assembly programs. It works in the following way:

1. Given a short program that needs to be optimized and a few tests (possibly zero), synthesize the shortest program that is consistent with the existing tests.

2. Using an SMT solver, try to prove that the synthesized and the given programs produce the same output on all possible tests. If proven, the synthesized program is an optimized version of the given program. If disproved, the SMT solver would find a test on which the two programs produce different output. Add the test to the set of tests and go to step 1.

Mind-blowingly, not only does this work, it generally converges for short programs in under 10 iterations!
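The two-step loop above can be sketched in a few lines. This is only a toy illustration under loud assumptions: the candidate list and its cost order are made up, and an exhaustive check over all 8-bit inputs stands in for the SMT solver.

```python
MASK = 0xFF  # assumption: programs operate on 8-bit unsigned values


def reference(x):
    """The (slow) program to optimize: multiply by 8 via repeated addition."""
    return (x + x + x + x + x + x + x + x) & MASK


# Hypothetical candidate programs, ordered from cheapest to most expensive.
CANDIDATES = [
    ("x", lambda x: x & MASK),
    ("x + 7", lambda x: (x + 7) & MASK),
    ("x << 1", lambda x: (x << 1) & MASK),
    ("x << 2", lambda x: (x << 2) & MASK),
    ("x << 3", lambda x: (x << 3) & MASK),
    ("x * 8", lambda x: (x * 8) & MASK),
]


def synthesize(tests):
    """Step 1: the cheapest candidate consistent with every known test."""
    for name, fn in CANDIDATES:
        if all(fn(x) == expected for x, expected in tests):
            return name, fn
    return None


def find_counterexample(fn):
    """Step 2: stand-in for the SMT solver, checks every possible input."""
    for x in range(MASK + 1):
        if fn(x) != reference(x):
            return x
    return None


def optimize():
    tests, iterations = [], 0
    while True:
        iterations += 1
        candidate = synthesize(tests)
        if candidate is None:
            return None, iterations
        name, fn = candidate
        x = find_counterexample(fn)
        if x is None:
            return name, iterations  # proven equivalent on all inputs
        tests.append((x, reference(x)))  # learn from the failing input


print(optimize())
```

Note how each counterexample eliminates every cheaper candidate that disagrees with the reference on that input, which is why the loop needs so few iterations.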

Programming from description is a more recent area of research. Until the emergence of Deep Learning a few years ago there was no sufficiently general way of extracting information from natural language. Arguably, even with modern Deep Learning approaches natural language understanding is still at a pretty early stage, which is one of the major limitations.

There have been papers that attempt to solve synthesis of SQL, BASH and Java from description, but they all face the challenges described below. To the best of my knowledge, no application of synthesis from description appears in any commercial product today.

Who works on Program Synthesis?

The number of labs that work on program synthesis is actually rather small. Besides NEAR, here are several well-known ones:

Berkeley lab led by Dawn Song

MIT lab led by Armando Solar-Lezama

ETH Zurich lab (notably Veselin Raychev and Martin Vechev), which ultimately incorporated as DeepCode.ai

Microsoft Research has a long history of working on program synthesis. Here are two good publication aggregates: one and two.

Open Challenges

When we talk about programming from description, there are several large challenges, all remaining mostly unsolved as of today.

1. Lack of data

This might sound crazy -- GitHub has so much code that even just crawling all of it is a non-trivial task, how could the data be lacking? But as a matter of fact, the data that is readily available, such as GitHub or StackOverflow, is not immediately usable because it is very noisy. Here are some datasets that we considered, and what challenges they come with:

Small commits that fix a simple bug, and the text of the associated issue. The person who fixes a bug has a lot of context about how the code operates. This context varies drastically between projects, and without it even an experienced human expert would not be able to predict the change in the code given the issue text.

Commit messages and the code. Besides commits also depending significantly on the context (i.e. a human expert often won't be able to predict the code in the commit given a well-formed commit message), this dataset also has a lot of noise. People often provide incorrect commit messages, squash multiple features and fixes into one commit describing only one feature, or just write something like "Fixing a bug we found yesterday".

Docstrings and function bodies. This one was very promising, but has two issues: a) a docstring describes how a function should be used, not how it is implemented, and predicting code from the usage pattern is a harder task than predicting code from a task description; and b) even in very good codebases quality docstrings are usually only provided for the public APIs, and the internal code is generally far less documented.

On the other hand, there are also competitive programming archives. Competitive programming as a dataset has several advantages:

The code is short and self-contained.

No context besides the problem statement is needed to write it.

The dataset of statements and accepted solutions is very clean -- accepted solutions almost certainly solve the problem from the statement.

There are, however, some challenges:

While the number of solutions is very high (CodeForces alone has tens of millions), the number of different problems is somewhat low. Our estimate suggests that the total number of different competitive programming problems in existence is somewhere between 200k and 500k, with ~50k being attainable with solutions when a reasonable effort is made. Only 1/3 of those problems are easy problems, so we are looking at having ~17k problems available for training.

The statements contain a lot of fluff. Alice and Bob walking on a street and finding a palindrome lying around is something I had in my nightmares on multiple occasions. This unnecessary fluff paired with the relatively low number of problem statements further complicates training the models.

The lack of data is also the primary reason why program synthesis is not a popular area of research, since researchers prefer to work on existing datasets rather than figuring out where to get data from. One piece of anecdotal evidence is the fact that after MetaMind published the WikiSQL dataset, multiple papers were immediately submitted to upcoming conferences, creating a minor spike in program synthesis popularity, despite the fact that the quality of the dataset is very low.

Having said that, annotating the competitive programming dataset to a usable state would increase the popularity of the field, bringing closer the moment when we are competing against bots, and not just humans.

2. External Knowledge

Consider the following problem that was recently given on CodeForces: given an array of numbers of size 5000, how many triplets of numbers are there in the array such that the XOR of the three numbers is zero, and the three numbers can be the sides of a non-degenerate triangle.

This is Div1 A/B level, and most experienced people solve it easily. However, from a machine's perspective it is extremely hard. Consider the following challenges:

The model would need to realize that it doesn't need to fix all three numbers: it is sufficient to fix two numbers and check whether their XOR exists in the array. (This is besides the fact that the model would need to understand that fixing three numbers would not fit into the time limit.)

The model would need to somehow know what it means for three numbers to be the sides of a non-degenerate triangle.

Out of the 50k problems that we have collected, only a few used that particular property of XOR in a similar way, and only a few dozen referred in some way to the property of the sides of a triangle. For a machine learning model to be able to solve such a problem, one of three things will need to happen:

We will find a way to learn from few examples. One-shot learning is a hot topic of research, with some recent breakthroughs, but no existing approach would be immediately applicable here.

We will find a way to provide the model with some external knowledge. This is an area that hasn't been researched much, and even figuring out how that knowledge should be represented is a challenging research task.

We will find a way to augment the dataset in such a way that a single concept that occurs only a few times in the dataset instead appears in a variety of contexts.

All three of those are open challenges. Until they are solved, we can consider an easier task: solving a competitive programming problem whose statement is (either initially, or by means of rewriting) very close to the solution, e.g.:

"Given an array of numbers, are there two numbers a and b in that array such that c = a XOR b also appears in the array, and the largest number among a, b and c is smaller than the sum of the remaining two?"

Can we solve it? With modern technology, not yet, and it makes sense to learn how to solve such problems before we even get to the more complex one-shot learning part.
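For reference, this is roughly the program a model would have to produce for the rewritten statement above. It is a sketch assuming the numbers are non-negative integers; the function name `has_xor_triangle` is made up for illustration.

```python
def has_xor_triangle(numbers):
    """Are there a, b in the array such that c = a XOR b is also in the array
    and the largest of a, b, c is smaller than the sum of the other two?"""
    present = set(numbers)  # O(1) membership checks for the third number
    for i, a in enumerate(numbers):
        for b in numbers[i + 1:]:
            c = a ^ b
            # max < sum of the other two  <=>  2 * max < a + b + c
            if c in present and 2 * max(a, b, c) < a + b + c:
                return True
    return False


print(has_xor_triangle([3, 5, 6]))  # 3 XOR 5 = 6 and 6 < 3 + 5
print(has_xor_triangle([1, 2, 3]))  # 1 XOR 2 = 3 but 3 = 1 + 2 is degenerate
```

Fixing only two numbers gives about 12.5 million pairs for an array of size 5000, whereas fixing all three would mean roughly 2 * 10^10 triplets. The triangle check also rules out the degenerate cases where c coincides with a or b, because then one of the three numbers is zero.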

3. Inherent uncertainty in Deep Learning models

Deep Learning models are probabilistic by design. Each prediction they make has some certainty associated with it, and therefore they generally keep having some chance of making a mistake even when trained well. If a model predicts code one token at a time, and a code snippet comprises 200 tokens, a model with 99% per-token accuracy would mess up at least one token with probability 87%. In natural language translation this is acceptable, but in Program Synthesis messing up one token most likely leads to a completely wrong program.

Notably, more traditional approaches to code synthesis (used primarily for programming by examples) don't suffer from the same problem. Marrying classic programming languages approaches with deep learning approaches is very desirable, but so far very little success has been achieved.
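The 87% figure follows from a one-line calculation, assuming (as a simplification) that mistakes on different tokens are independent:

```python
def p_at_least_one_mistake(per_token_accuracy, num_tokens):
    """Chance of at least one wrong token, assuming independent per-token errors."""
    return 1 - per_token_accuracy ** num_tokens


print(round(p_at_least_one_mistake(0.99, 200), 3))   # 200-token snippet, 99% accuracy
print(round(p_at_least_one_mistake(0.999, 200), 3))  # even 99.9% leaves a sizable risk
```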

Is it NEAR?

At NEAR, we are attempting to address many of the above problems. Here I will briefly cover our efforts that are relevant to solving competitive programming.

Data

As I mentioned above, even though competitive programming data is rather clean, it has some unnecessary fluff in the statements that we'd love to get rid of. For that we asked the community to rewrite problem statements in such a way that only a very formal and dry description of what exactly needs to be done is left.

We also used the help of the community to collect a dataset of statement-solution pairs in which no external knowledge (such as properties of XOR or triangles) is needed to write the solution given the statement. We plan to release a large part of this dataset during NAMPI 2018, so stay tuned.

This dataset is a set of problems with statements super close to the solution. Such problems are easier for a machine than even the simplest real problems on a real contest, but this dataset not only serves as the first milestone (being able to solve it is a prerequisite to solving more complex problems), it is also a good curriculum learning step -- a model that was taught to solve such problems is expected to pick up solving more complex problems more easily than a model that was not.

Data augmentation

To augment the dataset above, we created an algorithm that takes a solution as input and produces a very low level statement (very close to the solution) as output. The generator is built in such a way that the generated statements are close, in terms of certain metrics, to what people have written. Training on such a generated dataset achieves a non-zero accuracy on the human-generated dataset, and the closer we get the generated statements to what people write in terms of measurable metrics (such as BLEU score), the better the accuracy of the trained model on the human-generated set is.

"Non-zero accuracy" might not sound very impressive, but if you consider the fact that it effectively means that the model manages to learn how to solve some (albeit very simple) human-generated problems by only being trained on synthetic data, it is actually very promising, and is a huge step towards solving more complex problems, and ultimately solving actual contest problems in a live competition.

Fixing the uncertainty of Deep Learning models

At the core of our approaches are deep learning models that read in text and produce code as either a sequence of tokens or an AST. Either way, as mentioned above, even a very well trained model has a very high chance of messing up at least one token.

Trying to address this problem is on its own very close to what competitive programmers do. We are exploring quite a few approaches. We presented one such approach in our ICLR workshop paper -- the idea is to perform a beam search on ASTs until we find an AST of a program that passes the sample tests. At the time we published that paper we didn't have a good human-annotated dataset yet, so all the results were presented on a semi-synthetic dataset, but the model itself has not changed much since then, and we still use it on the human-annotated data today.
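The sketch below shows the general idea of combining beam search with sample tests, and is not the model from the paper. It works on flat three-token programs rather than ASTs, only checks completed programs, and uses a hard-coded probability table (`TOKEN_PROBS`, a made-up stand-in) where a trained network would be.

```python
import math

# Hypothetical stand-in for a trained model: token probabilities per position
# for programs of the form "<operand> <operator> <operand>".
TOKEN_PROBS = [
    {"a": 0.7, "b": 0.3},
    {"+": 0.5, "*": 0.3, "-": 0.2},
    {"b": 0.6, "a": 0.4},
]


def beam_search(beam_width):
    """Keep the `beam_width` most probable partial programs at every step."""
    beam = [(0.0, [])]  # (log-probability, tokens so far)
    for distribution in TOKEN_PROBS:
        expanded = [
            (log_p + math.log(p), tokens + [token])
            for log_p, tokens in beam
            for token, p in distribution.items()
        ]
        expanded.sort(key=lambda candidate: candidate[0], reverse=True)
        beam = expanded[:beam_width]
    return beam


def execute(tokens, a, b):
    """Run a three-token program on the inputs a and b."""
    left, op, right = tokens
    env = {"a": a, "b": b}
    x, y = env[left], env[right]
    return {"+": x + y, "*": x * y, "-": x - y}[op]


def first_passing_program(samples, beam_width):
    """Most probable program in the beam that passes all sample tests, if any."""
    for _, tokens in beam_search(beam_width):
        if all(execute(tokens, a, b) == expected for (a, b), expected in samples):
            return " ".join(tokens)
    return None


samples = [((2, 3), 6), ((4, 5), 20)]  # sample tests for "multiply two numbers"
print(first_passing_program(samples, beam_width=4))
print(first_passing_program(samples, beam_width=2))
```

In this toy setup the single most probable program is `a + b`, which fails the samples. A beam of width 4 still contains the correct `a * b`, while a beam of width 2 has already pruned it, which illustrates the trade-off between search cost and the chance of finding a passing program.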

Running beam search in the tree space is pretty slow and memory-consuming, and requires some tricky optimizations, such as using persistent trees, precomputing results on the go and others. Does anyone know a good place where I can find people who are good at writing complex algorithms on trees and who'd like to join a Silicon Valley-based VC-funded company that teaches machines to write code?

Another approach we researched is letting another (or the same) deep learning model fix the program if the first program that was generated doesn't pass the samples. The high level idea is to train a model that takes as input a problem statement and a program from the previous iteration, along with some feedback on why the program is wrong, and generates a new program (either from scratch, or by producing a diff). We have a publication reporting some early results, which was also accepted as a workshop paper at ICLR 2018.

To get to some more interesting ideas, consider asking the model to implement a binary search. Imagine that it mistakenly swaps the lines left = middle and right = middle. If you then feed the code back to the model, spotting this bug would be extremely hard. Even for humans it is a non-trivial task. So is the original source code the best medium to feed back to the model? One option we have been exploring is feeding an execution trace instead. If the model made the mistake above, the execution trace would always converge to one of the ends of the range instead of some value in the middle, which would provide way more information to the model than just the code itself. The challenge, however, is that execution traces of real programs are rather long, and condensing them to only show information that is likely to be relevant is an open and interesting challenge.
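Here is a small illustration of what such a trace looks like for the swapped-lines bug. It is a sketch only: the function name, the `swapped` flag and the trace format are assumptions for this example, not the representation we feed to the model.

```python
def traced_lower_bound(values, target, swapped=False):
    """Binary search for the first index with values[index] >= target.

    With swapped=True the assignments left = middle and right = middle trade
    places, reproducing the bug discussed above. Returns (index, trace), where
    trace lists (left, right, middle) for every iteration.
    """
    left, right = -1, len(values)
    trace = []
    while right - left > 1:
        middle = (left + right) // 2
        trace.append((left, right, middle))
        go_right = values[middle] < target
        if swapped:
            go_right = not go_right  # the bug: the two branches are exchanged
        if go_right:
            left = middle
        else:
            right = middle
    return right, trace


values = [1, 3, 5, 7, 9, 11, 13, 15]
for swapped in (False, True):
    index, trace = traced_lower_bound(values, 11, swapped)
    print("buggy  " if swapped else "correct", index, [m for _, _, m in trace])
```

For the target 11 the correct version probes the middles 3, 5, 4 and returns index 5, while the buggy version probes 3, 1, 0 and collapses to the left end of the range. That pattern is far easier to spot in the trace than the two swapped lines are in the source.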

How the community can help

If you find the concept interesting, and would like to help, there are (at least) two ways you can do it:

1. Help us annotate more data (and get paid for that!)

We run a labeling platform where we ask people to help us rewrite statements or annotate solutions. We have paid out more than $10k in the last few months, and are launching three new tasks there today. The invitation code to register is NEAR.

We particularly and desperately need people who speak Japanese or Romanian, and we will soon need people who speak Mandarin.

To participate, you need written English skills sufficient to write statements that English speakers can understand.

2. Help us get more solutions

If you have solved problems on informatics.mccme.ru, and would be willing to share your account with me so that I can crawl it, that would provide a lot of value. The website has great intro level problems, and presently we have no solutions for them.

We are also looking for accounts on Timus, SPOJ, acmp.ru and main.edu.pl / szkopul.edu.pl.