Some newbie messaged me with asking for help on this article https://www.geeksforgeeks.org/queries-for-number-of-distinct-elements-in-a-subarray-set-2/.

Can someone explain why time complexity is O(log n) and not O(n)?

Please read it once before downvoting. It is an advanced data structure, that is not well known.

.

Yes I can see that. I don't understand the part where he merges sets in $$$O(1)$$$

.

I think you are right, it's not $$$O(\log n)$$$. They explicitly build set with all disctinct elements in the segment, and it obviously can contain $$$n$$$ elements for a single query. And you can't create a set of $$$n$$$ elements in $$$o(n)$$$ time.

Actually, it is not even $$$O(n)$$$. It is at least $$$O(n \log n)$$$, maybe even more, because they blindly merge sets, and I don't want to analyze the worst case :)

I thought putting $$$O(n)$$$ elements in set is $$$O(n)$$$.

Well, you can sort elements, putting them in a set, and then going through set, so it can't be $$$O(n)$$$, if you put them in a random order.

Another thing is if you use

unordered_set, then you can indeed achieve $$$O(n)$$$. But on the next line they writeFor this purpose we can use a self-balancing BST or “set” data structure in C++.

So it's not the case

How do you quote though?

i think the writer is wrong! because the segment building is O(n * logn * logn) because every element has to insert in at least logn sets. if you think that in [L, R] we have k elements, these k element can be in at most logn sets and if in the merging of the set he insert the little set into the bigger set it could be done in O(k * logn * logn * logn). btw dont always trust to GFG.

Don't trust any article that uses real values to compute a power of two:

h = (2 * (pow(2, h))) - 1;Damn! Destruction 100 XD

I think there is also something similiar to this known as Merge Sort tree, where in segment tree node you store the whole range that the node is responsible for, but in sorted order.

This let's you answer queries of form $$$ \{L,R,X,Y \} $$$ online in $$$ O( log^{2}(N) ) $$$, where you need to find number of values of array $$$A$$$ between $$$[L,R]$$$ such that $$$ X \le A[i] \le Y $$$.

The build phase takes more than $$$O(N)$$$ operations as for a usual segment tree. So their approach is correct?

EDIT: Ah, but you still need to merge sets in query too. My bad, I thought we could just return the set sizes and add them for a query in which we have to go down.

GeeksForGeeks publishing wrong information and articles, same old story.

Same old story, Ricks killing Mortys.

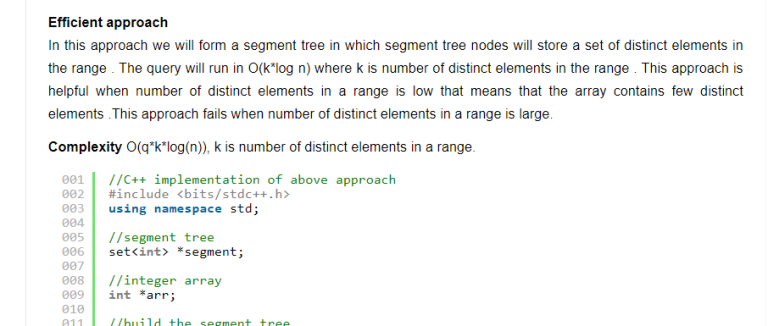

Hi, I am the author of the post in GFG. I would like to say that when we submit a article it is reviewed by a reviewer who can change any statement or complexity or code. I submitted a draft and it was changed by the reviewer and then published. It might be changed by other person's too after publishing the article ( as GFG have article improvement authors too). As I am being made fun of in this blog ( obviously my name is attached to the bottom of article and that is why i came to know). I searched for the draft and took a screenshot of the draft. Here what i submitted "Efficient approach In this approach we will form a segment tree in which segment tree nodes will store a set of distinct elements in the range . The query will run in O(k*log n) where k is number of distinct elements in the range . This approach is helpful when number of distinct elements in a range is low that means that the array contains few distinct elements .This approach fails when number of distinct elements in a range is large.

Complexity O(q*k*log(n)), k is number of distinct elements in a range." here what i might say i am wrong is K = n. so i accept it that it would be better if i write O(q*n*log n).

And we are often asked to write our articles using codes that are present in GFG such as https://www.geeksforgeeks.org/segment-tree-set-1-sum-of-given-range/ so my segment tree code looks similar to it.

I am not saying that is the most efficient but i am not totally responsible for the claims made in the article. I am sorry if anyone has faced any problem due to this.

Thanks for replying. I'm not sure why this edit was made by gfg moderation. I don't think so they should be able to edit your post without your approval. But then again, GFG does a lot wrong. I would recommend to not post there as their moderation system is really bad. I don't know how the moderation team edited a post without the author's approval.

A small note about the time complexity which you might have missed. Consider a query from 1 to $$$n-1$$$. You will be merging disjoint sets of $$$2^i$$$ for $$$i = 1$$$ to $$$\log_2(n)$$$. Now consider that each set has at most $$$min(k, 2^i)$$$ elements. So you will merge $$$\sum min(k,2^i)$$$ elements, where each merge will take $$$O(\log k)$$$ time, so you will merge approximately $$$O(k) + O(k(\log n - \log k))$$$ elements. so time complexity is actually $$$O(k\log (n/k) \log k)$$$. So you can correct your article with this.

Actually I want to be clear with one more thing. I didn't exactly mean to make fun of you for making mistakes(At least what I assumed to be one), as you have written in your post. It's not like I wouldn't make mistakes. I don't like how gfg moderates its website, allowing a few mistakes, or even making it completely wrong in this case. Their job is to correct mistakes authors might have made, which is what they are unable to do.

Another such blog — https://www.geeksforgeeks.org/minimum-edges-required-to-make-a-directed-graph-strongly-connected/

If anyone want to write a correct solution, submit to this first.