Part 1: [Tutorial] My way of understanding Dinitz's ("Dinic's") algorithm

Part 2: [Tutorial] Minimum cost (maximum) flow

Part 3: [Tutorial] More about minimum cost flows: potentials and Dinitz

Introduction

As mentioned in the introduction of part 2, there is something in out ICPC notebook called "min cost dinic". This cycle (no pun intended) of blogs was initially meant to be just one blog explaining it all, but in time it developed into something much longer. The general idea here doesn't appear to be particularly widely discussed, but it is certainly not novel either; TopCoder, for example, calls it the primal-dual algorithm (why is it called that? That's a story for another time, but it has to do with LP duality.)

Picking up from where we left off, there are still two things to talk about:

- Where does Dinitz come in?

- Something called "potentials".

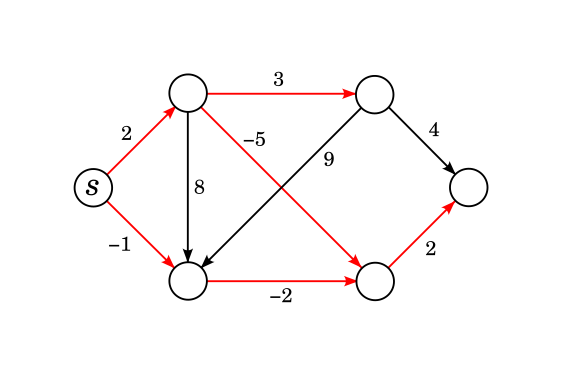

Even more generally, recall the "universal max-flow algorithm template" from part 1.

Algorithm 1. Given a flow network $$$(G, c, s, t)$$$.

- Initialize $$$f$$$ by setting $$$f(e) \gets 0$$$ for all edges. This is a valid flow.

- While $$$t$$$ is reachable from $$$s$$$ in $$$G_f$$$:

-

- Find some flow $$$\Delta f$$$ in $$$G_f$$$.

-

- Let $$$f \gets f + \Delta f$$$.

- Return $$$f$$$.

Our aim is to prove the correctness of the following generalization.

Algorithm 2. Given a flow network $$$(G, c, a, s, t)$$$ without negative cycles.

- Initialize $$$f$$$ by setting $$$f(e) \gets 0$$$ for all edges.

- While $$$t$$$ is reachable from $$$s$$$ in $$$G_f$$$:

-

- Let $$$S$$$ be the shortest-paths subgraph of $$$G_f$$$.

-

- Find some flow $$$\Delta f$$$ in $$$S$$$.

-

- Let $$$f \gets f + \Delta f$$$.

- Return $$$f$$$.

A special case of this is if we use "maximum" instead of "some".

Again thanks to adamant and brunovsky for reviewing, as the two blogs used to be just one. $$$~$$$

From paths to subgraphs

The theory laid out in part 2 paves the way for proving the correctness of algorithm 2.

Definition 3. Let $$$(G, c, a, s, t)$$$ be a flow network without negative cycles. Denote by $$$\mathrm{dist}(u, v)$$$ the cost of the shortest path from $$$u$$$ to $$$v$$$. The shortest-paths subgraph of $$$G$$$ consists of all such edges $$$e$$$ from $$$u$$$ to $$$v$$$ that

The shortest-paths subgraph represents the space of all possible shortest paths from $$$s$$$. Pick any shortest path from $$$s$$$ and all of its edges will be in this subgraph. Conversely, pick any path starting from $$$s$$$ in this subgraph and it will be a shortest path.

Now that we have all necessary definitions for correctly stating algorithm 2, we can prove its correctness. The strategy here is to use the paths-and-cycles decomposition to reduce the algorithm to already proven ones.

Observation 4. Let $$$(G, c, a, s, t)$$$ be a flow network without negative cycles. Let $$$S$$$ be the shortest-paths subgraph of $$$G$$$ and $$$f$$$ a flow on $$$S$$$. Then $$$G_f$$$ is without negative cycles.

Therefore at any stage of the algorithm 2, $$$G_f$$$ is without negative cycles, or equivalently, $$$f$$$ is minimum cost for its value.

We can now specialize algorithm 2 to the case of Dinitz.

Algorithm 5. Given a flow network $$$(G, c, a, s, t)$$$ without negative cycles.

- Initialize $$$f$$$ by setting $$$f(e) \gets 0$$$ for all edges.

- While $$$t$$$ is reachable from $$$s$$$ in $$$G_f$$$:

-

- Let $$$S$$$ be the shortest-paths subgraph of $$$G_f$$$.

-

- Using Dinitz's algorithm, find a flow $$$\Delta f$$$ in $$$S$$$.

-

- Let $$$f \gets f + \Delta f$$$.

- Return $$$f$$$.

We may alternatively phrase it as such:

- Initialize $$$f$$$ by setting $$$f(e) \gets 0$$$ for all edges.

- While $$$t$$$ is reachable from $$$s$$$ in $$$G_f$$$:

-

- Let $$$S$$$ be the shortest-paths subgraph of $$$G_f$$$.

-

- While $$$t$$$ is reachable from $$$s$$$ in $$$S$$$:

-

-

- Construct the graph $$$D$$$ by doing a BFS from $$$s$$$

-

-

-

- Use the blocking-flow algorithm to find a flow $$$\Delta f$$$ in $$$D \subseteq S \subseteq G_f$$$

-

-

-

- Let $$$f \gets f + \Delta f$$$

-

- Return $$$f$$$

Note the "two-tiered" structure. The $$$D$$$ in Dinitz is also kind of like a shortest path subgraph. More precisely, we first find the shortest path subgraph $$$S$$$ with respect to edge costs, and then find $$$D$$$ in $$$S$$$ which is also kind of like a shortest path subgraph, except all edges have length 1 this time. You can in principle also find the blocking flow in $$$S$$$ directly, but I have no idea what that does to the complexity.

Potentials

To make algorithm 5 into something that you can actually submit, there is still more work to do. Working with negative weights is a must, so we need to actually develop an effective way to find the shortest-paths subgraph, which incidentally means finding shortest paths.

With negative edge costs, Dijkstra's algorithm won't work properly; the best "classical" method for finding shortest paths from $$$s$$$ is the Bellman-Ford algorithm which runs in quadratic time. However, there is a better way than finding shortest paths using Bellman-Ford every time.

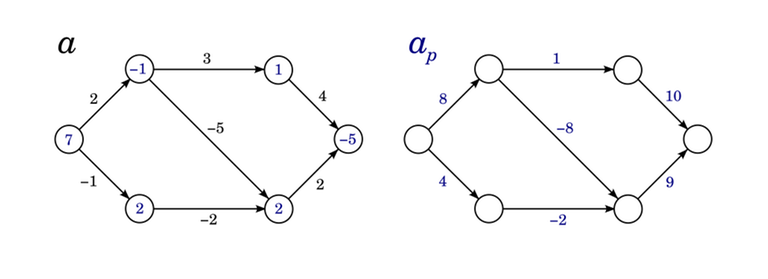

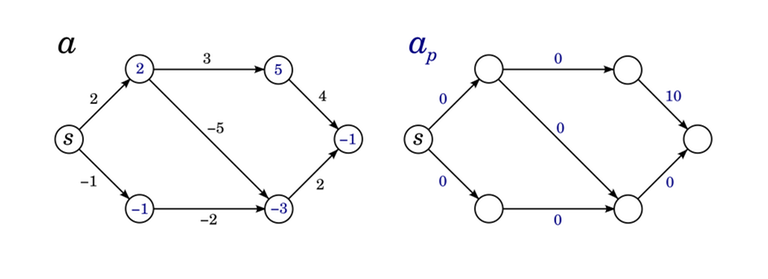

Let $$$G$$$ be a directed graph with edge costs $$$a \colon\, E \to \mathbb{R}$$$ and let $$$p \colon\, V \to \mathbb{R}$$$ be a function. Redefine the edge costs as such: for an edge $$$e$$$ from $$$u$$$ to $$$v$$$, let

In other words, for each edge, we add to its cost the difference of $$$p$$$ at its endpoints.

Consider some path $$$u_0 u_1 \cdots u_k$$$ in $$$G$$$ with edges $$$e_1, e_2, \ldots, e_k$$$. The cost of this path w.r.t. the edge costs $$$a_p$$$ is

In other words, for intermediate vertices, there is both a $$$+p(v)$$$ and a $$$-p(v)$$$ in the sum; they cancel out. Only the first and last one remain. That is, the cost w.r.t $$$a_p$$$ is the cost w.r.t. $$$a$$$ plus the difference of $$$p$$$ at its endpoints.

In particular,

Observation 6. Given a directed graph $$$G$$$, edge costs $$$a \colon\, E \to \mathbb{R}$$$ and a map $$$p \colon\, V \to \mathbb{R}$$$. For any two vertices $$$u$$$ and $$$v$$$, the shortest paths from $$$u$$$ to $$$v$$$ w.r.t. $$$a$$$ are exactly the shortest paths from $$$u$$$ to $$$v$$$ w.r.t. $$$a_p$$$.

This means that if we want to find shortest paths, we may as well take some $$$p$$$ and replace the edge costs that way, and it doesn't change the answer in any meaningful way. In particular, if we could choose $$$p$$$ in such a way that all edge costs become nonnegative, we could use Dijkstra's algorithm to find shortest paths. This motivates the following definition.

Definition 7. Given a directed graph $$$G$$$ and edge costs $$$a \colon\, E \to \mathbb{R}$$$, a function $$$p \colon\, V \to \mathbb{R}$$$ is called a potential if $$$a_p (e) \ge 0$$$ for all edges $$$e$$$.

The following observation will show that a potential always exists in the cases that we care about.

Observation 8. Given a directed graph $$$G$$$ and edge costs $$$a \colon\, E \to \mathbb{R}$$$ such that there are no negative cycles. Let $$$s$$$ be a vertex such that every vertex of the graph is reachable from $$$s$$$. Define $$$p(v)$$$ as the length of the shortest path from $$$s$$$ to $$$v$$$. Then $$$p$$$ is a potential.

The fact that there are so many 0-s is not a coincidence. Every edge on the shortest-path subgraph has a potential 0.

We won't need this here, but by adding an extra "supersource" vertex, this observation can be generalized to show that any graph without negative cycles admits a potential function. The converse is also true: if there is a negative cycle, there can't be a potential. Thus a potential function exists if and only if there are no negative cycles. By the way, there is an absolutely ingenious problem about potentials (not to do with flows) here (warning: reading the problem right now will spoil the solution).

Anyway, the idea for a potential-using minimum cost flow algorithm looks like this.

Algorithm 9. Given a flow network $$$(G, c, a, s, t)$$$ without negative cycles.

- Initialize $$$f$$$ by setting $$$f(e) \gets 0$$$ for all edges.

- Use Bellman-Ford to calculate a potential.

- While $$$t$$$ is reachable from $$$s$$$ in $$$G_f$$$:

-

- Use Dijkstra's algorithm and the current potentials to calculate the shortest-paths subgraph of $$$G_f$$$; let $$$S$$$ be this subgraph. Use the newly found shortest paths to get the new potentials.

-

- Using Dinitz's algorithm, find a flow $$$\Delta f$$$ in $$$S$$$.

-

- Let $$$f \gets f + \Delta f$$$.

- Return $$$f$$$.

To prove correctness, we can show the following.

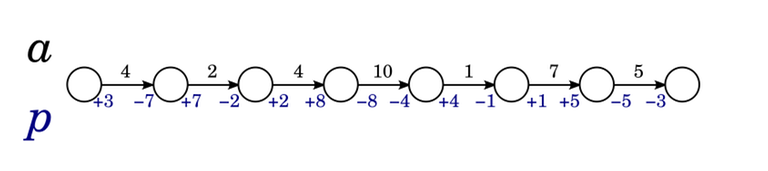

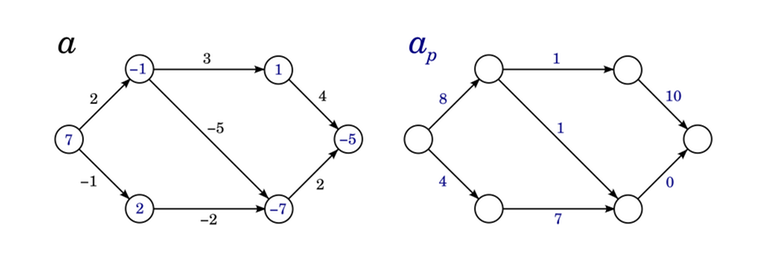

Observation 10. Let $$$G$$$ be a directed path with edge costs $$$a \colon\, E \to \mathbb{R}$$$ such that there are no negative cycles. Let $$$s$$$ be a vertex such that every vertex of the graph is reachable from $$$s$$$. Let $$$p \colon\, V \to \mathbb{R}$$$ be the shortest-path potential. Let $$$e$$$ be an edge in the shortest-path subgraph. Then:

- Suppose $$$e$$$ is removed. Then $$$p$$$ remains a valid potential.

- Suppose an edge $$$\overleftarrow{e}$$$ is added with cost $$$a(\overleftarrow{e}) = -a(e)$$$. Then $$$p$$$ remains a valid potential.

We can easily modify the proof to the case that one or both of these things happen to a lot of edges at once. Then if $$$p$$$ was the shortest path potential before, it's still a valid potential afterwards.

Note that the observation statement is fairly restrictive. The potential $$$p$$$ must be the shortest-path potential, but it is only a valid potential afterwards. This is why it is important to recalculate the potentials every time we run the algorithm.

By the way, I should clarify what I mean by "use the newly found shortest paths to get the new potentials". Let $$$p$$$ be the "old" potential on $$$G_f$$$ and assume $$$p(s) = 0$$$. If the true length of the shortest path of $$$s \leadsto u$$$ is $$$C$$$, then the length of the shortest path found using the potentials is $$$C - p(u)$$$. We set the new potential at $$$u$$$ to $$$C$$$ (not $$$C - p(u)$$$), or equivalently, we sum the old potential and the newly found length of the shortest path. Thus, the potentials at any given time are the "true" shortest-path potentials on $$$G_f$$$. In particular, the potentials will not grow exponentially, overflow under typical assumptions or anything like that. If $$$\left|c(e)\right| \le 10^9$$$ and $$$n \le 10^5$$$ (for example), the potentials won't exceed $$$10^{14}$$$ as a shortest path won't visit a vertex twice unless there is a negative cycle.

Now we can apply observation 10 to prove the correctness of algorithm 9. When we find the flow $$$\Delta f$$$, it is in the shortest-path subgraph of $$$G_f$$$. Since the potential $$$p$$$ was the shortest-path potential before, it is still a valid potential and thus we can run Dijkstra's algorithm. However, it is no longer a valid shortest-path potential, so we must recalculate the potentials at that point. The statement of observation 10 states that every vertex must be reachable from $$$s$$$, but we can get around that by treating vertices that are not reachable from $$$s$$$ as no longer present in the graph. There is no way we can send some flow from $$$s$$$ to $$$t$$$ such that they will become reachable again, so these vertices can be effectively forgotten about.

Summary

One last time, let's write out the algorithm with full details.

Algorithm 11. Given a flow network $$$(G, c, a, s, t)$$$ without negative cycles.

- Initialize $$$f$$$ by setting $$$f(e) \gets 0$$$ for all edges.

- Use Bellman-Ford to calculate a potential.

- While $$$t$$$ is reachable from $$$s$$$ in $$$G_f$$$:

-

- Use Dijkstra's algorithm and the current potentials to calculate the shortest-paths subgraph of $$$G_f$$$; let $$$S$$$ be this subgraph. Use the newly found shortest paths as the next potentials.

-

- While $$$t$$$ is reachable from $$$s$$$ in $$$S$$$:

-

-

- Construct the graph $$$D$$$ by doing a BFS from $$$s$$$

-

-

-

- Use the blocking-flow algorithm to find a flow $$$\Delta f$$$ in $$$D \subseteq S \subseteq G_f$$$

-

-

-

- Let $$$f \gets f + \Delta f$$$

-

- Return $$$f$$$.

As in the last blog, I won't go into details about the complexity. One thing to notice though is that every time the length of the shortest path changes, we have a new maximum flow problem. The classical solution to minimum cost flow solves this essentially by Ford-Fulkerson, we solve it by Dinitz, which means that we should be able to expect speedup similar to the speedup from Ford-Fulkerson to Dinitz in the maximum flow case.

Finally, code (note: see this comment to see what's missing).